I’m Vaidotas (Vai), and with Francesco, we are co-founders of Doqume.

We use AI to create knowledge bases and tools for research that help knowledge workers optimize their search for data and insights.

We started working on Doqume because we believe knowledge workers are confronting poorly organized knowledge with search solutions that are too basic. We believe there is a better way.

There are roughly 780 million knowledge workers in the world, who spend 6.5 hours a day in front of a computer and 19% of their workdays are devoted to the activity of searching for data and information. The average knowledge worker has to work with:

- large quantities of information

- old knowledge being replaced by new information at a fast pace

- knowledge stored in silos and developed vertically rather than integrated horizontally

- keyword-based search rather than semantically enabled

- need to read (pretty obvious, uh?). Still forced to read even when information can be extracted

Natural Language Processing (NLP) and Machine Learning (ML) can resolve some of these problems, but while those technologies are emerging in most sectors, knowledge workers don’t seem to have access to them to solve their problems.

This is why we are focusing on creating a single place for the research of canonical knowledge. One that integrates various open data sets, multiple open encyclopedias, open online communities, and individual or corporate-owned knowledge bases.

A human knowledge-map glued by semantic technology and machine learning to provide a central place of reference for doing research in knowledge-intensive organizations.

The challenge

We spend so much time researching data, information, and insights. No surprise there. But if the problem is so relevant to our productivity, how come there’s no wide-spread adoption of technologies to address the problem?

Unfortunately, when it comes to creating comprehensive research resources, technical elements prove troublesome. Some of the key challenges:

- Building a taxonomy (hierarchy that classifies things or concepts in a domain of interest) is necessary, as it allows classification of content in meaningful categories to improve retrieval and accessibility.

- Integrating external data sets can help augment what is available from resources internal to an organization, but as the number of data sets increases, their integration becomes a painful process, too costly to perform manually, and difficult to automate.

- Keeping a knowledge base up to date is especially important in fields where information changes rapidly, such as cellular agriculture, genetics, competitive intelligence, investment, etc… This makes knowledge bases quickly obsolete if they are not maintained to reflect these changes.

- Extracting insights from unstructured text is something that can replace reading or skimming in cases when the type of information being searched is either semi-structured or well defined. Technological solutions exist but require interlinked knowledge of NLP and ML.

Across the past year of research and development, we have focused on resolving some of the above issues with the aim of developing a comprehensive resource for knowledge workers.

Below are some of the aspects we’ve focused on so far.

Leverage data sets in search

The idea of abstraction of search originally struck us after a prospect, an editorial magazine, asked for a search functionality that would abstract a search entity to its upper taxonomical category.

His idea was to perform a search for cathedrals, but rather than searching for the word ‘cathedral’ itself, he wanted the search criteria to be populated with names of known cathedrals.

It turns out that an engine capable of abstracting search and analyze results semantically can have various applications.

A possible use case could be to perform a search in the medical records of a group of patients, search for genetic variants thought to be linked with myocardial infarct but of uncertain significance, and return any symptoms that those patients have been experiencing based on their past medical records. The study of patterns could help to tell if the variant is pathogenic.

This type of analysis could be particularly useful in fields where information changes fast and often.

There’s no one size fits all solution

Some of the biggest frustrations we’ve observed using search engines (Google included) are (1) the difficulty to perform searches that go outside the de facto standard keyword search, and (2) the challenge with extracting meaningful information from text without having to read each sentence in every article.

This frustration has manifested countless times in my own work. I would spend most days researching AI models. The main idea was for me to find companies that employed a specific model and learn from their use case.

In order to research NLP companies, I had to resort to verbose queries, typing in synonyms, acronyms, advanced operators. And many re-writes after I would finally have the list of relevant pages. At which point I had to read every line in each article to find the name of those companies.

What I wanted, instead, was a search that would take into account synonyms, acronyms and grammatical variations of the word, and, once a list of pages was returned, I wanted to automatically extract the names of companies mentioned in each article.

Resolving that problem with Doqume was pretty much the beginning of our Research Engine. Since then, we’ve also figured there’s very cool stuff we can do with those entities once we find them, but that will be for another article.

You might be wondering, why use an engine when you can access this type of data in a structured manner from services like Crunchbase, CB

Those services can have varying levels of accuracy, but the rule of thumb is that the better they are the more curated they are. Curation requires manual efforts and that’s reflected in the final cost of the product.

The pre-built report VS the means for researching on your own terms

There are dozens of companies in every sector whose core business is data. Some of those like Crunchbase and CB lnsights are known as Data as a service businesses. They build the algorithm, unleash it on thousands of websites, scrape a mass of data that makes partial sense, clean it up, make sense of it, and generate it into a structured format.

This industry’s core business is in its semi-automated work of data mining, aggregation, maintenance, and sometimes creation, of pre-fabricated market reports or insights.

Data as a service solutions might not be available, or viable, if:

- data is about a niche market and there’s no service covering that market

- the insight is very specific or not of general interest

- access to that data is closed, or limited to you, or your organization

- data or insight has a short expiry date

- data is openly available, not worth investing in a subscription

As we mentioned above, NLP and ML can resolve some of these problems. Those technologies are emerging, yet, we as knowledge workers don’t seem to have a middle ground between a pre-populated report and a keyword-based search engine.

Buried knowledge

Human produced content is for the most part unstructured. While this content is accessible to people, it is not as easily accessible to machines. This limits what can be done with it.

Unstructured data has huge potential, but you won’t be able to query or aggregate insights without building interlinked NLP and ML models.

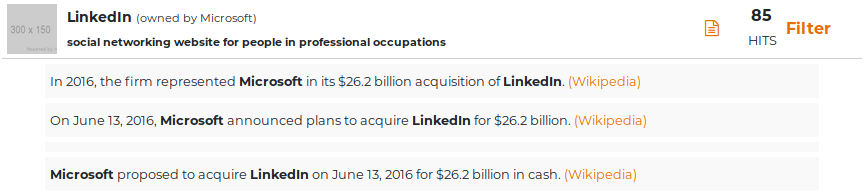

Consider the sentence “Microsoft has acquired LinkedIn”: once “Microsoft” and “LinkedIn” are identified as entities that represent two companies, the insight behind this sentence is that Microsoft is LinkedIn’s parent company.

The Wikipedia experiment

To exemplify the value of buried knowledge we have performed an experiment using two Wikimedia foundation projects: Wikipedia (the digital encyclopedia) and Wikidata (a repository of structured knowledge that just like Wikipedia anyone can contribute to).

The experiment consisted in counting how many insights can be extracted from Wikipedia pages that were not in Wikidata.

Since Wikipedia and Wikidata are parallel projects by the same organization, it would be reasonable to expect that information available in one would reflect data present in the other.

For example, if the Wikipedia page of Microsoft states LinkedIn is of Microsoft’s ownership, you’d expect the same information to be in Wikidata – mostly because mapping knowledge between Wikipedia and Wikidata is easier than having users produce that knowledge manually twice.

For our experiment, we limited to develop a ML model to extract a single set of data types: parent-child relationships between companies (such as Microsoft and Linkedin) and industry of operation (e.g., Microsoft is a software development company).

Results

At the time Wikidata had roughly 100,000 data-points of this type (parent-child company relationships, and industry data). Doqume was able to extract, with high precision, over 40,000 new data-points available in Wikipedia but not known to Wikidata, accounting to a 40% of their knowledge base.

This result tells two stories: 1. there’s a known discrepancy between type and amount of data available under the two Wikimedia projects. 2. the fact that this data was left dormant in Wikipedia, instead of being populated into Wikidata, is an indication of the technical challenge behind building an engine that enables the extraction of insights.

Help us learn your challenges with research

The above are some examples of how we operate at Doqume, but there’s much more we’ll share in future posts.

We are currently working with professionals in very different fields, from science to investment. We’d love to hear your feedback, and learn what is your experience with research in your field – please do get in touch!